Every NAAC cycle starts with confidence.

Committees are re-formed.

Departments are instructed to submit updated information.

IQAC teams begin intense coordination across the institution.

Yet despite months of preparation, many universities exit the accreditation process with the same quiet frustration:

“We did everything right. Why didn’t the score reflect it?”

The uncomfortable truth is this:

NAAC outcomes rarely disappoint because institutions misunderstand the framework.

They disappoint because institutions overestimate the strength of their data foundation.

Until leadership recognises this gap, accreditation will continue to feel stressful, unpredictable, and disproportionately demanding, no matter how committed the teams involved are.

NAAC Is Not an Event You Prepare For

One of the most persistent myths in higher education governance is that NAAC is an event.

In reality, NAAC is a mirror.

Peer teams are not evaluating how efficiently documents were assembled over a few months. They are observing whether academic intent, execution, monitoring, and outcomes align over multiple years.

Institutions that treat NAAC as a documentation exercise inevitably struggle. Those that treat it as a governance outcome experience far less disruption.

This difference becomes evident when examining why legacy campus platforms fail to provide institutional clarity, a challenge explained in “Why Traditional University ERPs Struggle with Institutional Visibility — and How Modern Platforms Are Architected Differently.”

When systems are built for transactions rather than academic evidence, accreditation exposes the gap mercilessly.

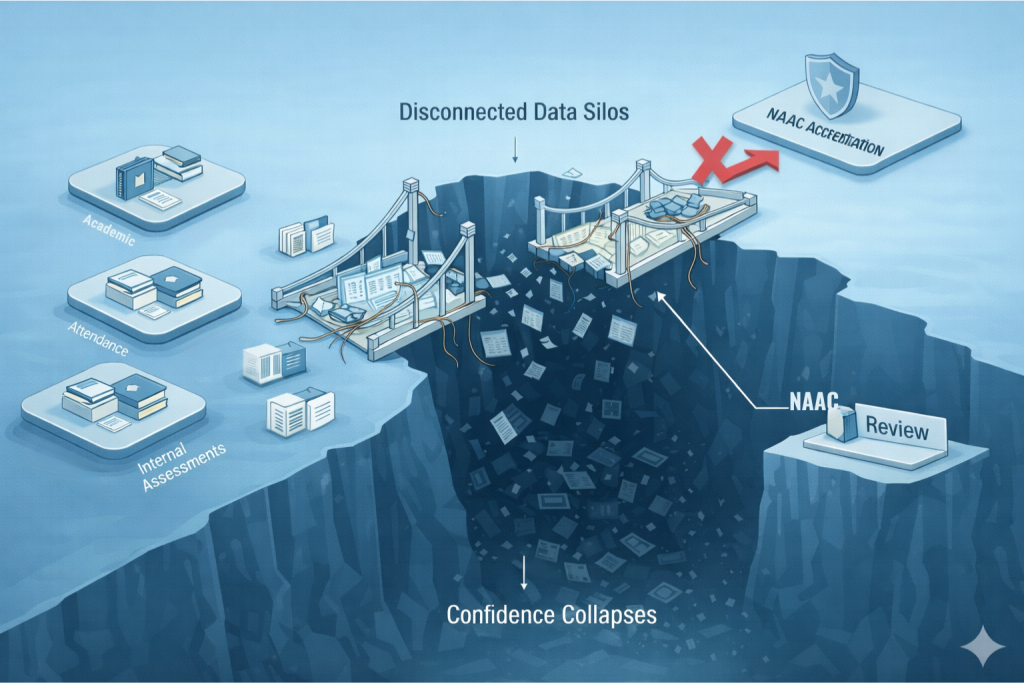

Where Accreditation Actually Breaks Down

Accreditation does not fail during the peer visit.

It breaks down much earlier—inside daily academic and administrative workflows.

Every institution generates data continuously:

- Teaching plans

- Attendance records

- Internal assessments

- Learning outcomes

- Student feedback

- Action-taken reports

The problem is not data creation.

The problem is data continuity.

When these elements live in silos, are maintained independently by departments, and are reconciled manually only during accreditation cycles, confidence in the data collapses.

This is precisely why accreditation bodies focus on institutional processes rather than isolated evidence, as outlined in “How Accreditation Bodies Evaluate Higher Education Institutes for Quality Assurance.”

“We Have the Data” Is the Most Dangerous Assumption

Almost every university believes it has the data NAAC requires.

Technically, this is true.

But NAAC does not assess whether data exists.

It assesses whether data is reliable, traceable, and defensible.

Reliable data means:

- Metrics are defined consistently across departments

- Numbers can be traced back to academic actions

- Evidence holds across academic cycles

- No individual “fixes” data under pressure

When IQAC teams must reconcile multiple versions of the same metric, leadership is forced to rely on trust instead of verification. At that point, accreditation outcomes become uncertain—regardless of effort.
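The reconciliation problem described above can be made concrete with a small check. The following is a minimal sketch, not a description of any particular platform: the record layout (`metric`, `year`, `source`, `value`) and the function name `find_conflicting_metrics` are assumptions introduced purely for illustration, and the sample figures are hypothetical. It groups reported values by metric and academic year, then surfaces every case where two sources disagree.

```python
from collections import defaultdict


def find_conflicting_metrics(submissions):
    """Return {(metric, year): {source: value}} for every metric/year
    pair where the reporting sources do not agree on the value."""
    values = defaultdict(dict)
    for s in submissions:
        key = (s["metric"], s["year"])
        values[key][s["source"]] = s["value"]
    return {
        key: by_source
        for key, by_source in values.items()
        if len(set(by_source.values())) > 1  # more than one distinct value
    }


# Hypothetical sample data: the same metrics reported by different sources.
submissions = [
    {"metric": "pass_percentage", "year": "2022-23", "source": "exam_cell", "value": 87.5},
    {"metric": "pass_percentage", "year": "2022-23", "source": "dept_sheet", "value": 91.0},
    {"metric": "student_strength", "year": "2022-23", "source": "admissions", "value": 1240},
    {"metric": "student_strength", "year": "2022-23", "source": "dept_sheet", "value": 1240},
]

conflicts = find_conflicting_metrics(submissions)
for (metric, year), by_source in conflicts.items():
    print(metric, year, by_source)
```

Run continuously, a check of this kind turns "multiple versions of the same metric" into a visible exception list rather than a surprise during the accreditation cycle.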

Why Documentation-First NAAC Preparation Is Structurally Weak

The conventional NAAC preparation model follows a familiar pattern:

- Collect departmental data

- Standardise formats

- Resolve inconsistencies manually

- Draft criterion-aligned narratives

- Validate with leadership

This approach fails because documentation is being asked to repair fragmented data.

Documentation cannot do that.

Accreditation-ready evidence must be produced as a natural by-product of academic operations—not assembled retrospectively under pressure.

This philosophy underpins modern digital solutions for higher education, particularly platforms designed to automate evidence continuity rather than reporting effort, as explained in “A Guide on Various Aspects of Accreditation and How iCloudEMS Automates the Groundwork of Accreditation.”

NAAC Is a Governance Stress Test

Viewed objectively, NAAC is not merely a compliance exercise.

It is a governance stress test.

Implicitly, it asks leadership:

- Can you see institutional performance clearly?

- Can decisions be defended with evidence?

- Can improvement cycles be demonstrated over time?

- Can data speak without reinterpretation?

These are governance capabilities, not clerical ones.

This shift in thinking explains why leadership now evaluates platforms as governance infrastructure rather than simple software tools, a perspective discussed in “How University Leaders Should Evaluate an Education Management System (EMS).”

The Registrar’s Invisible Risk Load

No role experiences accreditation pressure more acutely than the Registrar.

During NAAC cycles, the Registrar becomes:

- The final checkpoint for conflicting data

- The escalation point for unresolved inconsistencies

- The institutional guarantor of accuracy

When systems are fragmented, every query requires manual reconciliation. Every reconciliation increases dependency on individuals and introduces institutional risk.

This is not a workload issue.

It is a system-architecture issue.

In a mature Education Management System (EMS), the Registrar validates data rather than repairing it—dramatically reducing exposure during audits.

Evidence-Assembled vs Evidence-Ready Institutions

Across institutions, accreditation outcomes show a clear pattern.

Evidence-Assembled Institutions

- Data compiled only when required

- Heavy reliance on spreadsheets

- High dependency on individuals

- Elevated audit stress

- NAAC perceived as disruption

Evidence-Ready Institutions

- Data generated continuously

- System-validated records

- Minimal individual dependency

- Calm audit environments

- NAAC perceived as confirmation

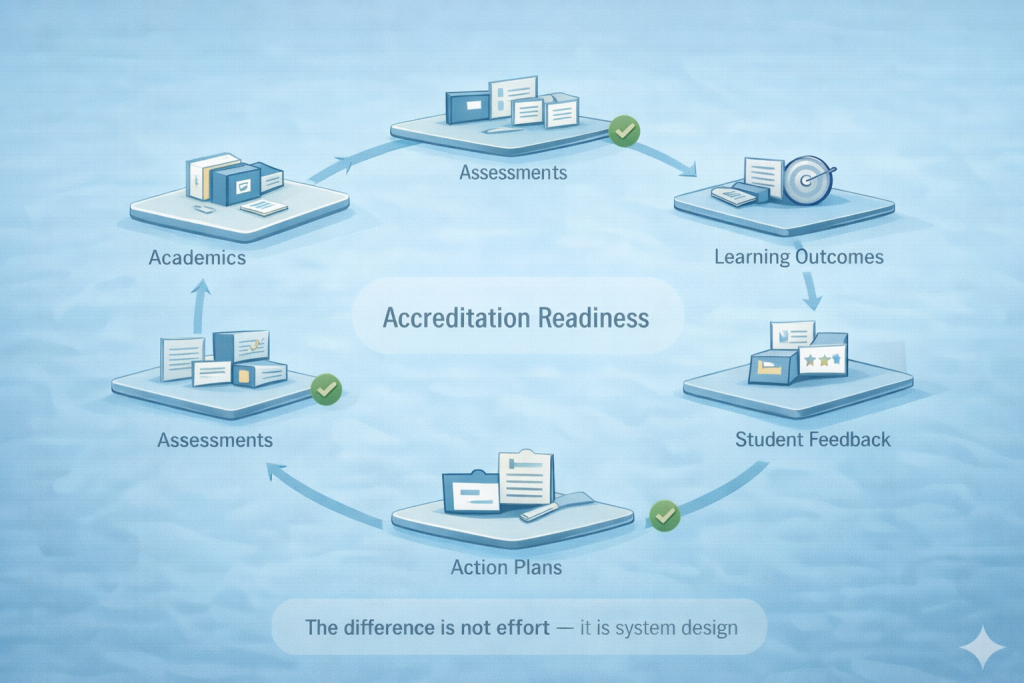

The difference is not effort or intent.

It is system design.

Why ERP Thinking No Longer Supports Accreditation

Many institutions still search for “college ERP” or “university ERP software.” This reflects historical market language, not present-day requirements.

Traditional ERP systems were designed to manage transactions—fees, payroll, inventory—not academic outcomes, assessment mapping, or accreditation intelligence.

This maturity gap is analysed in “University ERP in India: Why Most Systems Never Reach Institutional Maturity.”

A modern Education Management System (EMS) goes beyond ERP logic by connecting:

- Academics to assessments

- Assessments to outcomes

- Outcomes to feedback

- Feedback to action plans

- Action plans to leadership decisions

This is the architecture NAAC implicitly evaluates.
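The chain of connections listed above is, in data terms, a traceability structure: each record points back to the record it was derived from. The sketch below illustrates that idea only. The `Record` class, its fields, the record ids, and the `trace_to_origin` function are hypothetical names chosen for this example and do not describe how any specific EMS stores its data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Record:
    record_id: str
    kind: str                           # e.g. "assessment", "outcome"
    derived_from: Optional[str] = None  # id of the upstream record, if any


def trace_to_origin(record_id: str, records: Dict[str, Record]) -> List[str]:
    """Follow upstream links from a record back to its origin and return
    the kinds encountered, starting with the record itself.
    Raises KeyError if a link points at a missing record (a broken chain)
    and ValueError if the links form a cycle."""
    chain: List[str] = []
    seen = set()
    current: Optional[str] = record_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current}")
        seen.add(current)
        rec = records[current]
        chain.append(rec.kind)
        current = rec.derived_from
    return chain


# Hypothetical records mirroring the chain in the list above.
records = {
    "AC1": Record("AC1", "academics"),
    "AS1": Record("AS1", "assessment", derived_from="AC1"),
    "OC1": Record("OC1", "outcome", derived_from="AS1"),
    "FB1": Record("FB1", "feedback", derived_from="OC1"),
    "AP1": Record("AP1", "action_plan", derived_from="FB1"),
    "LD1": Record("LD1", "leadership_decision", derived_from="AP1"),
}

chain = trace_to_origin("LD1", records)
print(" <- ".join(chain))
```

If any link in the chain is missing, the lookup fails immediately, which is the programmatic equivalent of a decision that cannot be defended with evidence.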

Early Awareness Changes Accreditation Outcomes

Institutions that perform confidently during NAAC cycles share one trait: they are rarely surprised.

Issues are identified early—long before they escalate into audit findings.

AI-driven early awareness systems are designed to detect:

- Attendance anomalies

- Academic risk patterns

- Assessment inconsistencies

- Compliance drift

This proactive approach is detailed in “AI in Universities Is Not About Automation — It’s About Early Awareness.”

Accreditation improves not because AI generates documents, but because it prevents data decay.
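To make "early awareness" tangible, consider the simplest item on the list above, attendance anomalies. The sketch below uses a plain z-score rule from basic statistics; it is a stand-in for illustration and not the AI method used by any product. The function name `flag_attendance_anomalies`, the threshold of 2.0, and the weekly rates are all assumptions.

```python
from statistics import mean, stdev


def flag_attendance_anomalies(weekly_rates, threshold=2.0):
    """Return the indices of weeks whose attendance rate lies more than
    `threshold` sample standard deviations away from the mean."""
    if len(weekly_rates) < 3:
        return []  # too few points for a meaningful spread
    mu = mean(weekly_rates)
    sigma = stdev(weekly_rates)
    if sigma == 0:
        return []  # every week is identical, nothing to flag
    return [
        i for i, rate in enumerate(weekly_rates)
        if abs(rate - mu) / sigma > threshold
    ]


# Hypothetical weekly attendance rates for one course section.
rates = [0.92, 0.90, 0.91, 0.93, 0.89, 0.55, 0.92, 0.91]

flagged = flag_attendance_anomalies(rates)
print(flagged)  # [5]
```

The point is timing rather than sophistication: a drop flagged in the week it occurs can be explained and acted on, whereas the same drop discovered during evidence assembly can only be reconciled.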

Where iCloudEMS Fits—Quietly but Structurally

The purpose of iCloudEMS is not to impose additional processes or cultural disruption.

It exists to:

- Align academic workflows with outcomes

- Maintain evidence continuity across years

- Embed accreditation readiness into daily operations

- Support leadership with decision-grade insights

As a cloud-native, AI-powered Education Management System (EMS), iCloudEMS strengthens governance quietly—so accreditation reflects reality, not last-minute preparation.

Why Less Anxiety Often Produces Better NAAC Scores

This is the paradox leadership often overlooks.

Institutions that worry less about NAAC tend to perform better.

Because quality is not created under pressure.

It is revealed under review.

Frequently Asked Leadership Questions

Is NAAC primarily about documentation or data systems?

Documentation matters only when backed by consistent, traceable data generated through daily academic operations.

Can spreadsheets still support accreditation?

They may work temporarily, but they increase dependency, inconsistency, and audit risk as institutions grow.

Is ERP sufficient for NAAC readiness?

Traditional ERP systems were not designed for outcome mapping, evidence continuity, or accreditation intelligence.

When should NAAC preparation actually begin?

NAAC readiness should be continuous and embedded into governance, not initiated close to assessment cycles.

Does technology alone improve NAAC scores?

No. Governance discipline supported by the right Education Management System (EMS) does.

What is leadership’s role in data governance?

Leadership must demand clarity, not just reports. Systems should support decision-making, not paperwork.

Is NAAC becoming stricter?

NAAC is becoming more data-driven. Transparency exposes inconsistencies faster than ever before.

A Final Reflection for Institutional Leaders

If NAAC feels exhausting,

the framework is not the problem.

The fragility of data truth is.

Strengthen the data foundation, and accreditation stops being a struggle—and starts becoming validation.

If you’ve experienced this challenge—or solved it differently—your perspective matters.

What has NAAC revealed about your institution’s data reality?